Something for (almost) nothing: Improving deep ensemble calibration using unlabeled data, new arXiv preprint

We present a method to improve the calibration of deep ensembles in the small training data regime when unlabeled data are available. Our approach is extremely simple to implement: given an unlabeled set, for each unlabeled data point, we simply fit a different randomly selected label with each ensemble member. We provide a theoretical analysis based on a PAC-Bayes bound, which guarantees that if we fit such a labeling on the unlabeled data and the true labels on the training data, we obtain low negative log-likelihood and high ensemble diversity on testing samples. Empirically, through detailed experiments, we find that for small to moderately sized training sets, our ensembles are more diverse and provide better calibration than standard ensembles, sometimes significantly.


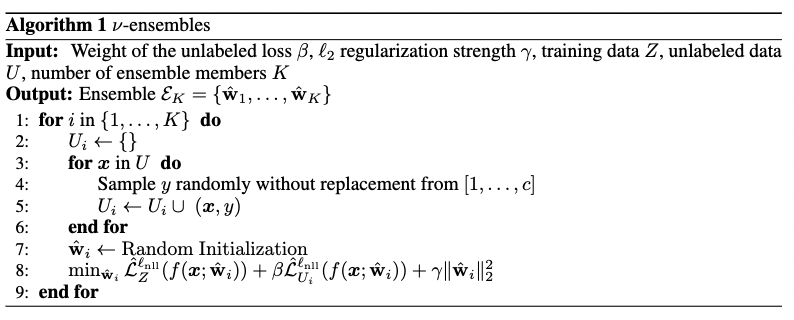

Do you have a small to medium-sized training set together with some unlabeled data? Are you looking for an extremely simple way to train a deep ensemble with better calibration than a standard one? We present $\nu$-ensembles, which do exactly that. Each member of a $\nu$-ensemble is trained to fit the true labels on the training data and, for every unlabeled data point, a randomly selected label that differs from the labels assigned to that point for the other ensemble members.
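The label-assignment step of this recipe can be sketched in plain Python. This is a minimal illustration, not the authors' code: the function names `assign_nu_labels` and `member_training_sets` are hypothetical, and the sketch assumes the ensemble has at most as many members as there are classes, so that the labels for each unlabeled point can be drawn without replacement. Training each member on its own set (presumably with the usual cross-entropy loss, which is our assumption) is left out.

```python
import random
from typing import List, Sequence, Tuple


def assign_nu_labels(num_unlabeled: int, num_classes: int, ensemble_size: int,
                     seed: int = 0) -> List[List[int]]:
    """Return labels[m][i]: the random label that member m fits on unlabeled point i.

    Labels for each point are drawn without replacement, so no two members share
    a label on the same point. Assumption of this sketch: ensemble_size <= num_classes.
    """
    if ensemble_size > num_classes:
        raise ValueError("this sketch assumes ensemble_size <= num_classes")
    rng = random.Random(seed)
    labels = [[0] * num_unlabeled for _ in range(ensemble_size)]
    for i in range(num_unlabeled):
        drawn = rng.sample(range(num_classes), ensemble_size)  # distinct labels
        for m in range(ensemble_size):
            labels[m][i] = drawn[m]
    return labels


def member_training_sets(train: Sequence[Tuple[object, int]],
                         unlabeled: Sequence[object],
                         labels: List[List[int]]) -> List[List[Tuple[object, int]]]:
    """Each member's data: labeled pairs with true labels plus its own random labeling."""
    return [list(train) + list(zip(unlabeled, member_labels)) for member_labels in labels]


# Usage: 10 classes and 10 members, so every class is used once per unlabeled point.
labels = assign_nu_labels(num_unlabeled=4, num_classes=10, ensemble_size=10)
for i in range(4):
    assert sorted(labels[m][i] for m in range(10)) == list(range(10))
```

Drawing without replacement is what makes the labels "different" across members for the same point; with independent draws, two members could end up fitting the same random label.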

At this point the curious reader might ask: “How is it possible to improve testing metrics by fitting random labels?” The crucial point is that, for each unlabeled data point, every possible label is fitted by exactly one ensemble member. Whatever the unknown true label of that point is, exactly one member therefore fits it and learns a useful feature.
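The counting argument can be checked directly. The sketch below assumes the ensemble has exactly as many members as there are classes, so each unlabeled point receives a random permutation of the classes; all function names are hypothetical. It verifies that, for any hidden true label, exactly one member fits it. As an idealization (real networks do not fit their labels perfectly), it also shows that if every member predicted its assigned label as a one-hot vector, the ensemble average on that point would be uniform, i.e. the members would disagree maximally there.

```python
import random
from typing import List


def permutation_labels(num_unlabeled: int, num_classes: int, seed: int = 0) -> List[List[int]]:
    """labels[i][m] is the random label that member m fits on unlabeled point i.

    Assumes the ensemble has exactly num_classes members, so each row is a
    random permutation of the classes (every label is used exactly once).
    """
    rng = random.Random(seed)
    labels = []
    for _ in range(num_unlabeled):
        row = list(range(num_classes))
        rng.shuffle(row)
        labels.append(row)
    return labels


def members_fitting_true_label(row: List[int], true_label: int) -> int:
    """Number of members whose random label coincides with the true label."""
    return sum(1 for y in row if y == true_label)


def idealized_ensemble_average(row: List[int], num_classes: int) -> List[float]:
    """Ensemble-average prediction if every member fitted its label exactly (one-hot)."""
    avg = [0.0] * num_classes
    for y in row:
        avg[y] += 1.0 / len(row)
    return avg


C = 10
labels = permutation_labels(num_unlabeled=1000, num_classes=C, seed=0)
hidden_true = random.Random(1).choices(range(C), k=1000)  # stand-in for unknown labels
assert all(members_fitting_true_label(row, y) == 1 for row, y in zip(labels, hidden_true))
print(idealized_ensemble_average(labels[0], C))  # every entry is 0.1
```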


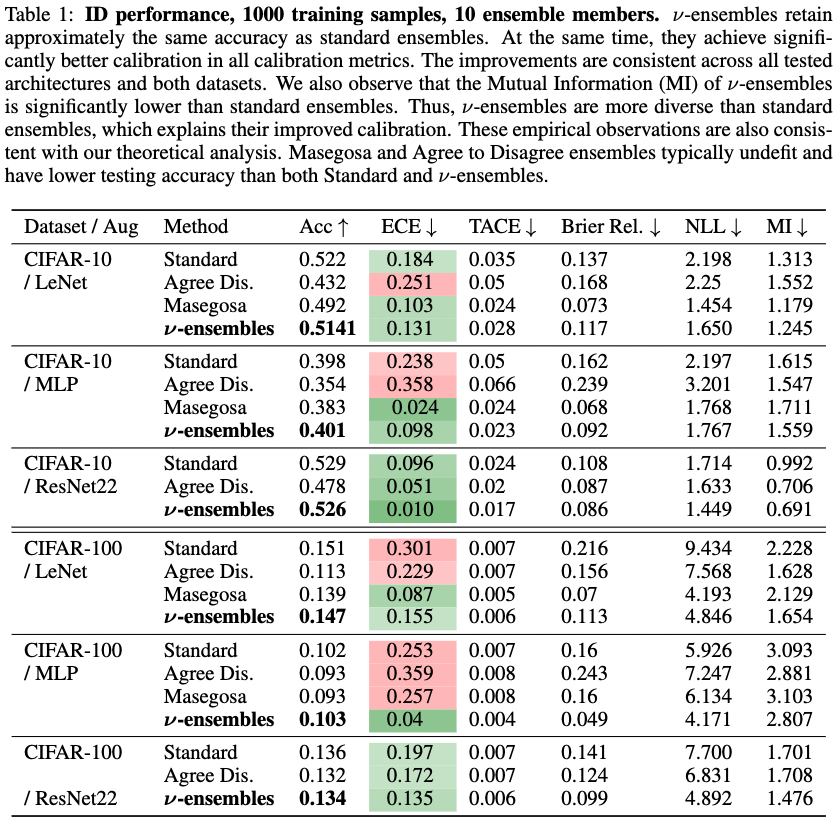

Below are some results on the CIFAR-10 and CIFAR-100 datasets.

We see that $\nu$-ensembles have accuracy comparable to standard ensembles but significantly better calibration across all calibration metrics. $\nu$-ensembles also achieve significantly higher diversity between ensemble members. These results are consistent across all architectures for both CIFAR-10 and CIFAR-100. For CIFAR-10, the testing accuracy is low; this is expected given the small size of the training set $Z_{\mathrm{train}}$.
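The post does not spell out which calibration metrics or which diversity measure are reported. The sketch below assumes common choices, namely negative log-likelihood (NLL), expected calibration error (ECE) with 15 equal-width confidence bins, and the average pairwise disagreement between members' predicted classes; it is an illustration of how such numbers are typically computed, not the evaluation code used in the paper. `member_probs[m][n][k]` is assumed to hold member `m`'s predicted probability of class `k` for test point `n`.

```python
import math
from typing import List, Sequence


def ensemble_mean(member_probs: Sequence[Sequence[Sequence[float]]]) -> List[List[float]]:
    """Average the members' class probabilities: member_probs[m][n][k] -> mean[n][k]."""
    m_count, n_count, k_count = len(member_probs), len(member_probs[0]), len(member_probs[0][0])
    return [[sum(member_probs[m][n][k] for m in range(m_count)) / m_count
             for k in range(k_count)] for n in range(n_count)]


def nll(probs: Sequence[Sequence[float]], labels: Sequence[int], eps: float = 1e-12) -> float:
    """Mean negative log-probability assigned to the true label."""
    return -sum(math.log(max(p[y], eps)) for p, y in zip(probs, labels)) / len(labels)


def ece(probs: Sequence[List[float]], labels: Sequence[int], num_bins: int = 15) -> float:
    """Expected calibration error: sum over bins of |B|/n * |accuracy(B) - confidence(B)|."""
    conf_sum, acc_sum, count = [0.0] * num_bins, [0.0] * num_bins, [0] * num_bins
    for p, y in zip(probs, labels):
        conf = max(p)
        pred = p.index(conf)
        b = min(int(conf * num_bins), num_bins - 1)  # confidence 1.0 goes in the last bin
        conf_sum[b] += conf
        acc_sum[b] += 1.0 if pred == y else 0.0
        count[b] += 1
    n = len(labels)
    return sum(abs(acc_sum[b] - conf_sum[b]) / n for b in range(num_bins) if count[b])


def pairwise_disagreement(member_probs: Sequence[Sequence[Sequence[float]]]) -> float:
    """Fraction of test points on which two members predict different classes, averaged over pairs."""
    preds = [[max(range(len(p)), key=p.__getitem__) for p in member] for member in member_probs]
    m, n = len(preds), len(preds[0])
    total, pairs = 0.0, 0
    for a in range(m):
        for b in range(a + 1, m):
            total += sum(preds[a][i] != preds[b][i] for i in range(n)) / n
            pairs += 1
    return total / pairs
```

Higher disagreement means a more diverse ensemble; lower NLL and ECE mean better calibration.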

We also compare with Masegosa ensembles and Agree to Disagree ensembles. Both tend to underfit the data and have worse testing accuracy than $\nu$-ensembles. Agree to Disagree ensembles also generally have worse calibration, whereas Masegosa ensembles have somewhat better calibration than $\nu$-ensembles in most cases.